Welcome! I’m passionate about building and leading high-performing engineering teams. Here you’ll find my thoughts on management, technology, and building a thriving engineering culture.

Recent Engineering

Automating the Data Deluge: Four Real-World Architectures with Azure Storage Actions

Managing data at scale is one of the biggest challenges in modern cloud architecture. As data volumes grow from terabytes to petabytes, manual operations become impossible, and simple scripts become brittle and insecure. Azure Storage Actions, a serverless framework for automating data management in Azure Storage, offers a powerful solution. It provides a no-code, scalable, and secure way to handle operations like lifecycle management, data organization, and policy enforcement across billions of objects.

Semantic Kernel and AutoGen: Microsoft’s Stack for Multi-Agent AI

Discover how Microsoft’s two key frameworks, Semantic Kernel and AutoGen, provide a powerful stack for creating sophisticated multi-agent AI systems. This article explores how to build specialized agents with Semantic Kernel and orchestrate their collaboration with AutoGen, while also comparing this approach to leading alternatives like CrewAI and LangGraph.

Book Review: Unlocking Data with Generative AI and RAG

I recently finished reading “Unlocking Data with Generative AI and RAG” by Keith Bourne, and it provides a comprehensive overview of building systems that can leverage large language models (LLMs) with your own private or new data. This post summarizes my key takeaways from the book.

The core idea is that the key technical components of a Retrieval-Augmented Generation (RAG) system include the embedding model that creates your embeddings, the vector store that holds them, the vector search mechanism that finds relevant content, and the LLM itself.
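To make these components concrete, here is a minimal, self-contained sketch in Python. It is a toy under stated assumptions, not code from the book: `embed` is a hypothetical hashed bag-of-words stand-in for a real embedding model, `InMemoryVectorStore` plays the roles of both the vector store and a brute-force cosine-similarity search, and the LLM call itself is left out. `build_prompt` only assembles the augmented prompt an LLM would receive.

```python
import math
import re
import zlib
from collections import Counter

DIM = 256  # size of the toy embedding space (arbitrary choice)


def embed(text: str) -> list[float]:
    """Toy 'embedding model': hash each word into a fixed-size vector, then L2-normalize.
    Hypothetical stand-in for a real model; it captures word overlap, not meaning."""
    vec = [0.0] * DIM
    for word, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryVectorStore:
    """Toy 'vector store' with brute-force 'vector search' over all stored items."""

    def __init__(self) -> None:
        self._items: list[tuple[list[float], str]] = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), text) for v, text in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


def build_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Augment the question with retrieved context; this string is what an LLM would receive."""
    context = "\n".join(f"- {chunk}" for chunk in store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

For example, after adding a few short documents, `store.search("how do I run language models locally", k=1)` should return the document that shares the most words with the query. A real system would swap in a learned embedding model and an approximate-nearest-neighbor index, but the division of responsibilities stays the same.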

AI Workflow Automation with a Personal MCP Server

Engineering managers operate at the intersection of numerous information streams, leading to significant context-switching overhead and potential loss of critical information. This post outlines the design for a context-aware AI assistant, orchestrated by the Gemini CLI, to solve this problem. We will explore two solutions: a short-term workaround using a command-line bridge and the recommended long-term solution involving a dedicated local server.

AI Workflow Automation for an Engineering Manager

The life of an engineering manager is a constant exercise in context switching. Between processing a flood of emails, keeping up with Slack channels, managing Jira tickets, reading Confluence pages, and preparing for one-on-one meetings with notes in OneNote, the mental overhead can be staggering. The core challenge isn’t just managing tasks; it’s about maintaining and retrieving context across a dozen different platforms, often in parallel.

Agentic Features for Codehub: Engineering Metrics Intelligence (part 2 of 2)

Enhancing the Codehub application with agentic capabilities can transform it from a static reporting tool into an intelligent engineering insights platform that proactively helps teams improve their performance and productivity.

See also Agentic Features for Codehub: Engineering Metrics Intelligence (part 1 of 2)

Agentic Features for Codehub: Engineering Metrics Intelligence (part 1 of 2)

Integrating agentic features into the Codehub app is an excellent way to enhance its value proposition, moving it from a data visualization tool to an intelligent assistant for engineers and managers. In this article, I’m brainstorming (with AI) several agentic features, categorized by the problems they solve and the personas they target.

Agentic Applications

Agentic applications represent a significant leap in AI, moving beyond simple chatbots to systems that can autonomously reason, plan, and execute multi-step tasks to achieve a defined goal. They are designed to operate with minimal human intervention, continuously learning and adapting over time.
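The reason, plan, and execute cycle can be sketched as a small loop. This is a hypothetical illustration in Python, not any framework’s API: `rule_based_planner` is a hard-coded stand-in for the LLM that would normally decide the next step, and the two tools (`fetch_open_prs`, `flag_stale_prs`) return made-up data. The step budget (`max_steps`) is a common safeguard against an agent looping indefinitely.

```python
from typing import Callable, Optional

State = dict
Tool = Callable[[State], State]


def fetch_open_prs(state: State) -> State:
    # Hypothetical tool with hard-coded data; a real agent would call an API here.
    return {**state, "prs": [{"id": 1, "age_days": 9}, {"id": 2, "age_days": 2}]}


def flag_stale_prs(state: State) -> State:
    # Hypothetical tool: mark PRs older than 7 days as stale.
    return {**state, "stale": [pr["id"] for pr in state["prs"] if pr["age_days"] > 7]}


def rule_based_planner(state: State) -> Optional[str]:
    """Stand-in for an LLM planner: inspect the current state and pick the next tool.
    Returning None means the goal (a list of stale PRs) has been reached."""
    if "prs" not in state:
        return "fetch_open_prs"
    if "stale" not in state:
        return "flag_stale_prs"
    return None


def run_agent(tools: dict[str, Tool], planner: Callable[[State], Optional[str]],
              max_steps: int = 10) -> tuple[State, list[str]]:
    """Plan-act-observe loop with a step budget so the agent cannot run forever."""
    state: State = {}
    trace: list[str] = []
    for _ in range(max_steps):
        step = planner(state)       # reason / plan
        if step is None:
            return state, trace     # goal reached
        state = tools[step](state)  # act, then observe the new state
        trace.append(step)
    raise RuntimeError("goal not reached within the step budget")


TOOLS: dict[str, Tool] = {"fetch_open_prs": fetch_open_prs, "flag_stale_prs": flag_stale_prs}
```

Running `run_agent(TOOLS, rule_based_planner)` executes the two tools in order and stops once the planner reports that the goal is reached. Replacing the planner with an LLM call is what turns this fixed script into an agent that can choose different paths for different goals.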

My First Steps into Agentic Coding with VS Code

I recently embarked on an interesting experiment with GitHub Copilot Agent that has given me some valuable insights into the evolving landscape of software development. I thought it would be worth sharing my experience and observations.

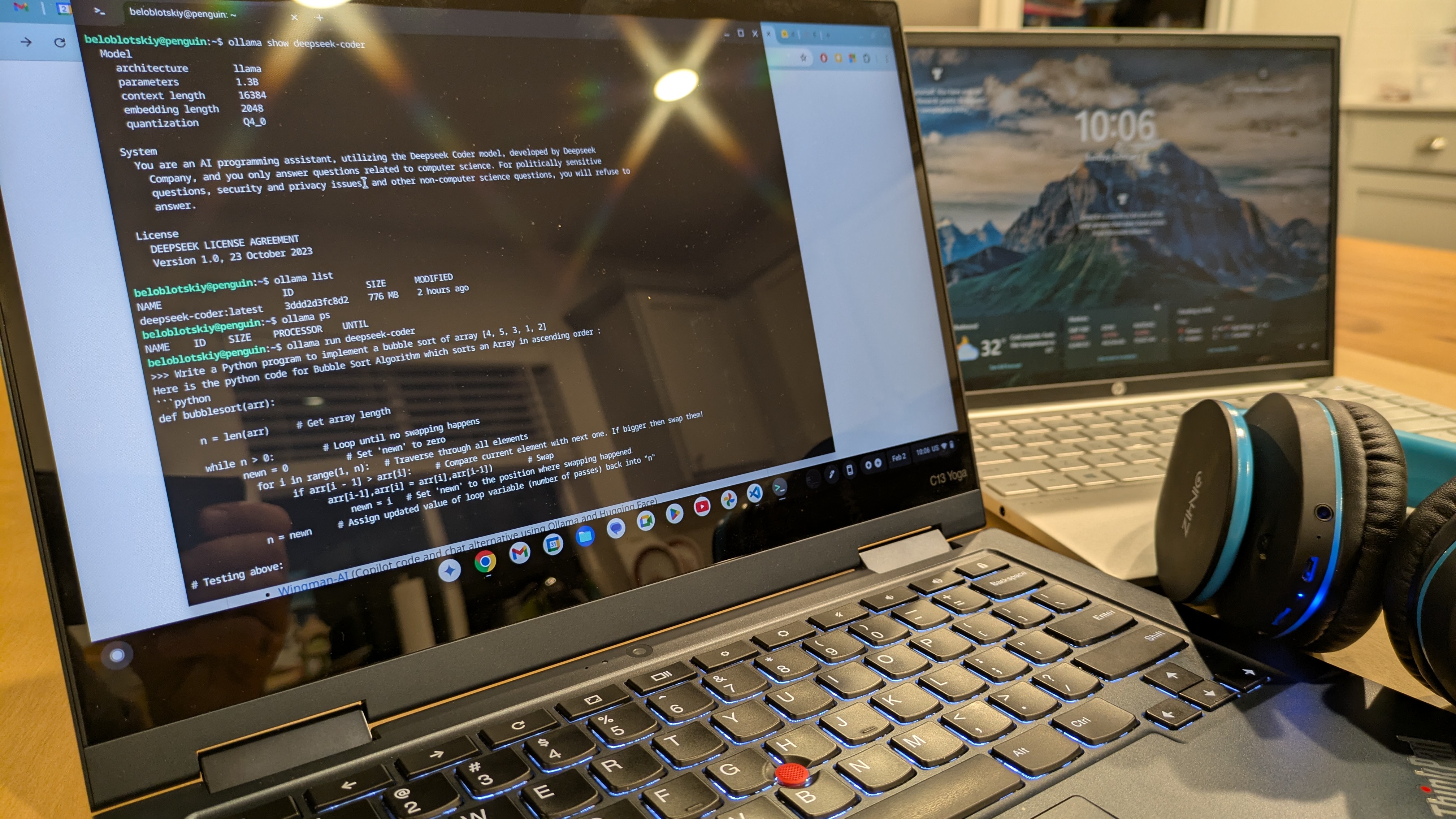

Offline LLM Inferencing

When it comes to running LLMs locally (offline, i.e., not in the cloud), we have several tools to choose from. Coming from “cloud-native” services, I was quite surprised by their popularity. However, after watching cloud costs accumulate rapidly, I decided to do a little research on the subject.

Probably the best article on the subject is https://getstream.io/blog/best-local-llm-tools/, a great one-stop shop for getting started with offline inferencing using open-source models.

Tools for Offline LLM Inferencing:

- Ollama

- Llamafile (a model and its runtime packaged as a single executable file)

- Jan

- LM Studio

- llama.cpp
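As an example of using one of these tools programmatically, Ollama exposes a local REST API (by default on port 11434). The sketch below assumes Ollama is running locally and that a model named `llama3` has already been pulled (`ollama pull llama3`); the model name is only an example. It uses only the Python standard library and requests a single, non-streamed response from the `/api/generate` endpoint.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a POST request asking for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

With Ollama running, `generate("Explain RAG in one sentence.")` returns the model’s reply as a string. Setting `"stream": False` trades incremental output for simpler code; by default Ollama streams newline-delimited JSON chunks.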

Engineering excellence metrics

Engineering Excellence is a measure of the efficiency of the development pipeline described in the PR lifecycle section below.

Sorry, this article is not finished yet, but the core idea should already be clear from the list below:

- Commits in all PRs

- Commits to all PRs ratio

- Updated Open PRs

- Closed (not Merged) PRs

- Abandoned PRs ratio

- Merged PRs

- Merged PRs Avg. Duration

- Merged PRs Additions (+lines)

- Merged PRs Deletions (-lines)

- Merged PRs Changed Files

- Merged PRs → main

- Merged PRs → main ratio

- AutoCRQ Deployments

- AutoCRQ PRs Lead Time
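Several of the listed metrics can be computed directly from pull request data. The following Python sketch covers a subset of them; the `PullRequest` fields and the exact ratio definitions (abandoned PRs as a share of all closed PRs, merged-to-main PRs as a share of all merged PRs) are assumptions for illustration, since the list above does not define them. Fetching the data from GitHub is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class PullRequest:
    created_at: datetime
    closed_at: Optional[datetime]  # None while the PR is still open
    merged: bool
    base: str                      # target branch, e.g. "main"
    commits: int
    additions: int
    deletions: int
    changed_files: int


def pr_metrics(prs: list[PullRequest]) -> dict:
    """Compute a subset of the metrics above (ratio definitions are assumptions)."""
    closed = [p for p in prs if p.closed_at is not None]
    merged = [p for p in closed if p.merged]
    abandoned = [p for p in closed if not p.merged]  # closed but not merged
    to_main = [p for p in merged if p.base == "main"]
    hours = [(p.closed_at - p.created_at).total_seconds() / 3600 for p in merged]

    def ratio(part: list, whole: list) -> float:
        return len(part) / len(whole) if whole else 0.0

    return {
        "commits_in_all_prs": sum(p.commits for p in prs),
        "closed_not_merged_prs": len(abandoned),
        "abandoned_prs_ratio": ratio(abandoned, closed),
        "merged_prs": len(merged),
        "merged_prs_avg_duration_h": sum(hours) / len(hours) if hours else 0.0,
        "merged_prs_additions": sum(p.additions for p in merged),
        "merged_prs_deletions": sum(p.deletions for p in merged),
        "merged_prs_changed_files": sum(p.changed_files for p in merged),
        "merged_prs_to_main": len(to_main),
        "merged_prs_to_main_ratio": ratio(to_main, merged),
    }
```

For example, two merged PRs that stayed open for 4 and 8 hours yield a `merged_prs_avg_duration_h` of 6.0. Guarding each ratio against an empty denominator keeps the report usable for quiet repositories.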

PR lifecycle

In our group we follow a simple yet effective PR workflow: a poly-repo setup with a simplified GitHub process. Basically, we use the main branch with automatic deployment to staging and the ability to promote a build to the production environment. The promotion script automatically creates a CRQ (a change record in ServiceNow), which we call an “auto-CRQ” because it requires no manual steps (approvals) unless the site status is anything other than green.
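The promotion rule can be expressed as a small piece of logic. This Python sketch is hypothetical: `SiteStatus`, `ChangeRecord`, and the two functions are illustrative names rather than the actual promotion script, the ServiceNow API is not called, and it assumes that an auto-CRQ lets the production deployment proceed immediately while any non-green status holds it for manual approval.

```python
from dataclasses import dataclass
from enum import Enum


class SiteStatus(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    RED = "red"


@dataclass
class ChangeRecord:
    # Hypothetical shape of a CRQ; a real ServiceNow change record has many more fields.
    build_id: str
    environment: str
    auto_crq: bool  # True when no manual approval is needed


def deploy_to_staging(build_id: str, log: list[str]) -> None:
    # Builds from main are deployed to staging automatically.
    log.append(f"staging:{build_id}")


def promote_to_production(build_id: str, site_status: SiteStatus, log: list[str]) -> ChangeRecord:
    # The promotion step always creates a CRQ; it skips manual approvals
    # (an "auto-CRQ") only when the site status is green.
    crq = ChangeRecord(build_id, "production", auto_crq=site_status is SiteStatus.GREEN)
    if crq.auto_crq:
        log.append(f"production:{build_id}")  # assumed: deploy proceeds right away
    else:
        log.append(f"awaiting-approval:{build_id}")
    return crq
```

Keeping the approval decision in a single boolean makes the "green means automatic" rule easy to audit and to report on, for example when counting AutoCRQ deployments.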